Least Squares

In this lecture, we will cover least squares for data fitting, linear systems, properties of least squares and QR factorization.

Least squares for data fitting

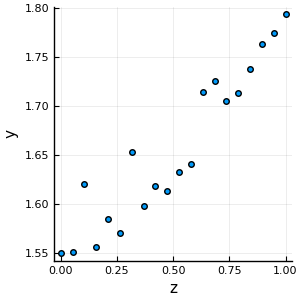

Consider the problem of fitting a line to observations y_i given inputs z_i for i = 1,\dots, n.

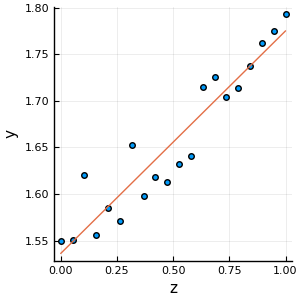

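In the figure above, the data points seem to follow a linear trend. One way to find the parameters c,s \in \R of the linear model f(z) = s\cdot z + c that corresponds to a line of best fit is to minimize the following sum of squared residuals subject to a linear constraint:

$$ \min_{s,\,c,\,r_1,\dots,r_n}\ \sum_{i=1}^n r_i^2 \quad\text{subject to}\quad s z_i + c + r_i = y_i,\quad i = 1,\dots,n. $$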

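The above minimization program can be reformulated as a linear least squares problem:

$$ \begin{equation}\label{least_squares_problem} \min_{\vx\in\R^2}\ \tfrac{1}{2}\|\mA\vx - \vb\|_2^2, \end{equation} $$

where

$$ \mA = \begin{bmatrix} z_1 & 1\\ \vdots & \vdots\\ z_n & 1\end{bmatrix},\quad \vb = \begin{bmatrix} y_1\\ \vdots\\ y_n\end{bmatrix},\quad \vx = \begin{bmatrix} s\\ c\end{bmatrix}. $$

Let

$$ \func{f}(\vx) = \tfrac{1}{2}\|\mA\vx - \vb\|_2^2 = \tfrac{1}{2}\vx^\intercal\mA^\intercal\mA\vx - \vb^\intercal\mA\vx + \tfrac{1}{2}\vb^\intercal\vb. $$

The gradient and Hessian of \func{f}(\vx) are:

$$ \nabla\func{f}(\vx) = \mA^\intercal\mA\vx - \mA^\intercal\vb \quad\text{and}\quad \nabla^2\func{f}(\vx) = \mA^\intercal\mA, $$

respectively. The Hessian is positive semidefinite for every \vx (and is positive definite if \mA has full column rank). This implies that the function \func{f}(\vx) is convex. Additionally, \vx = \vx^{*} is a critical point if

$$ \begin{equation}\label{least_square_normal_eqn} \mA^\intercal\mA\vx^{*} = \mA^\intercal\vb. \end{equation} $$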

Since \func{f}(\vx) is convex, \vx^{*} is a global minimizer. Equation \eqref{least_square_normal_eqn} is called the normal equations of the least squares problem \eqref{least_squares_problem}. Solving the normal equations, we get the following line of best fit.
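As a minimal sketch of the steps above, the following Python snippet forms the 2-by-2 normal equations for a line fit, assuming the rows of \mA are (z_i, 1) and \vb holds the y_i, and solves them in closed form. The function name `fit_line` and the data values are hypothetical, chosen only for illustration.

```python
def fit_line(z, y):
    """Return (s, c) minimizing sum((s*z_i + c - y_i)**2) via the normal equations."""
    n = len(z)
    # Entries of A^T A and A^T b, assuming the rows of A are (z_i, 1) and b = y.
    szz = sum(zi * zi for zi in z)
    sz = sum(z)
    szy = sum(zi * yi for zi, yi in zip(z, y))
    sy = sum(y)
    # A^T A = [[szz, sz], [sz, n]] and A^T b = [szy, sy].
    det = szz * n - sz * sz
    if det == 0:
        raise ValueError("A does not have full column rank (all z_i are equal)")
    # Solve the 2x2 system by Cramer's rule.
    s = (n * szy - sz * sy) / det
    c = (szz * sy - sz * szy) / det
    return s, c

# Hypothetical data lying close to the line y = 2z + 1.
z = [0.0, 1.0, 2.0, 3.0]
y = [1.1, 2.9, 5.2, 6.8]
s, c = fit_line(z, y)
print(f"slope = {s:.3f}, intercept = {c:.3f}")
```

The closed-form solve is only practical because there are two unknowns. For larger problems a factorization-based solver is the usual choice.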

Linear systems

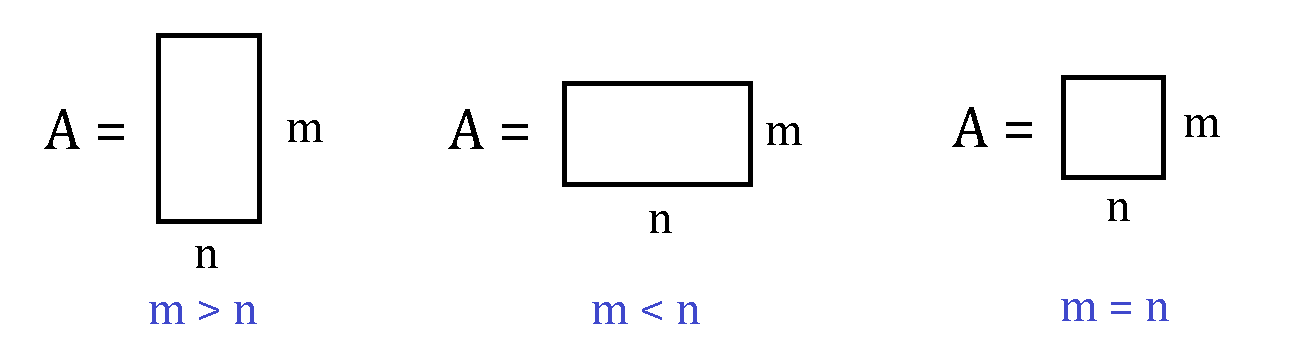

Consider the problem of solving a linear system of equations. For \mA \in \R^{m\times n} and \vb\in\R^{m}, the linear system of equations \mA\vx = \vb is:

- overdetermined if m>n,

- underdetermined if m< n, or

- square if m = n.

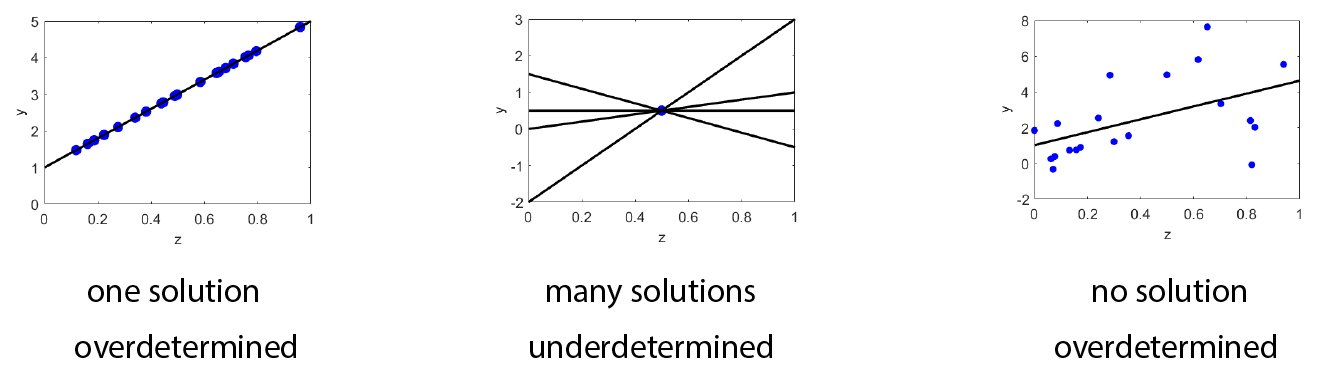

A linear system can have exactly one solution, many solutions, or no solutions:

In general, a linear system \mA\vx=\vb has a solution if and only if \vb \in \text{range} (\mA).
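One way to test the range condition is to compare the rank of \mA with the rank of the augmented matrix [\mA \ \vb]: the system is consistent exactly when the two ranks agree, and a consistent system has a unique solution exactly when the rank equals the number of columns n. The sketch below uses exact rational arithmetic to avoid rounding issues. The helper names `rank` and `classify` are hypothetical.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (list of rows) by Gaussian elimination over the rationals."""
    m = [[Fraction(v) for v in row] for row in rows]
    r = 0  # number of pivots found so far
    for col in range(len(m[0]) if m else 0):
        # Find a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [a - factor * p for a, p in zip(m[i], m[r])]
        r += 1
    return r

def classify(A, b):
    """Return 'none', 'unique', or 'many' for the system A x = b."""
    n = len(A[0])
    augmented = [list(row) + [bi] for row, bi in zip(A, b)]
    rank_A = rank(A)
    if rank(augmented) != rank_A:
        return "none"  # b is not in range(A)
    return "unique" if rank_A == n else "many"

A = [[1, 0], [0, 1], [1, 1]]       # overdetermined: m = 3, n = 2
print(classify(A, [1, 2, 3]))      # b = first column + 2 * second column
print(classify(A, [1, 2, 4]))      # b lies outside range(A)
print(classify([[1, 1]], [2]))     # underdetermined: m = 1, n = 2
```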

Properties of linear least squares

Recall that the minimizer \vx^* to the linear least squares problem satisfies the normal equations:

$$ \mA^\intercal\mA\vx^* = \mA^\intercal\vb, $$

with the residual

$$ \vr^* = \vb - \mA\vx^* $$

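satisfying \mA^\intercal\vr^* = \vzero. Here, \mA \in \R^{m\times n}. The minimizer of the linear least squares problem is unique if and only if \mA^\intercal\mA is invertible. Even when the minimizer is not unique, the vector in the range of \mA closest to \vb is unique, i.e. \vb^* = \mA\vx^* is unique. Recall that the range space of \mA and the null space of \mA^\intercal are:

$$ \set{R}(\mA) = \{\mA\vx : \vx \in \R^n\}, \qquad \set{N}(\mA^\intercal) = \{\vz \in \R^m : \mA^\intercal\vz = \vzero\}. $$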

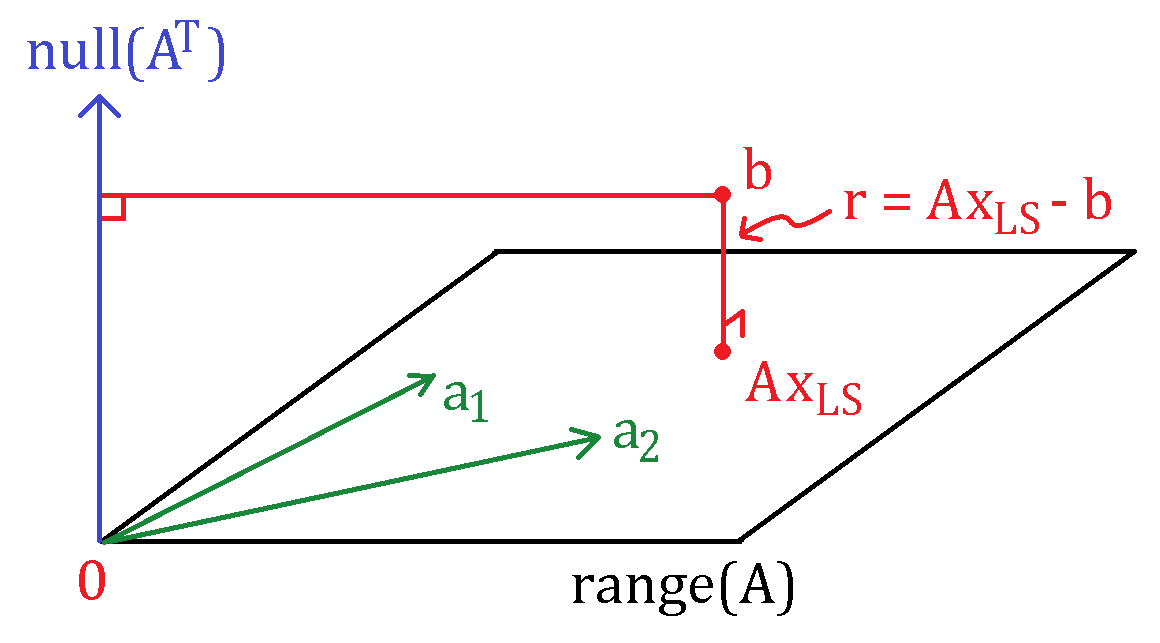

By the fundamental theorem of linear algebra, we have $$ \begin{equation}\label{least_squares_FTLA} \set{R}(\mA) \oplus \set{N}(\mA^\intercal) = \R^m. \end{equation} $$ Thus, for all \vx \in \R^m, we have

$$ \vx = \vu + \vv, \qquad \vu \in \set{R}(\mA),\quad \vv \in \set{N}(\mA^\intercal), $$

with \vu and \vv uniquely determined. This is illustrated in the figure below:

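Here, \vx_{LS} is the least squares solution and \mA = \begin{bmatrix}\va_1&\va_2&\dots&\va_n\end{bmatrix}, with \va_i \in \R^m for all i. Comparing with \eqref{least_squares_FTLA}, we get

$$ \vb = \mA\vx_{LS} + \vr, \qquad \mA\vx_{LS} \in \set{R}(\mA),\quad \vr \in \set{N}(\mA^\intercal). $$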
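The splitting of \vb into a component in the range of \mA and a residual in the null space of \mA^\intercal can be checked on a small example. The sketch below assumes a hypothetical 3-by-2 matrix and right-hand side, solves the normal equations exactly with rational arithmetic, and confirms that the residual is orthogonal to the columns of \mA. The names `matvec`, `x_ls`, `u`, and `r` are illustrative.

```python
from fractions import Fraction as F

# Hypothetical 3x2 example in which b is not in the range of A.
A = [[F(1), F(0)], [F(0), F(1)], [F(1), F(1)]]
b = [F(1), F(2), F(4)]

def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]

At = [list(col) for col in zip(*A)]  # rows of At are the columns of A
AtA = [[sum(p * q for p, q in zip(ci, cj)) for cj in At] for ci in At]
Atb = matvec(At, b)

# Solve the 2x2 normal equations A^T A x = A^T b by Cramer's rule.
det = AtA[0][0] * AtA[1][1] - AtA[0][1] * AtA[1][0]
x_ls = [
    (Atb[0] * AtA[1][1] - AtA[0][1] * Atb[1]) / det,
    (AtA[0][0] * Atb[1] - AtA[1][0] * Atb[0]) / det,
]

u = matvec(A, x_ls)                    # component of b in range(A)
r = [bi - ui for bi, ui in zip(b, u)]  # residual b - A x_ls

print(matvec(At, r))                         # A^T r, expected to be the zero vector
print(sum(ui * ri for ui, ri in zip(u, r)))  # inner product of u and r
```

Because the arithmetic is exact, both printed quantities are exactly zero rather than merely small.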

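\exa{1} What is the least-squares solution \vx^* for the problem

$$ \min_{\vx\in\R}\ \tfrac{1}{2}\|\mA\vx - \vb\|_2^2, $$

where

$$ \mA = \begin{bmatrix} 1\\ \vdots\\ 1\end{bmatrix} \in \R^{m}, \qquad \vb = \begin{bmatrix} b_1\\ \vdots\\ b_m\end{bmatrix}? $$

\text{Solution:} First set up the normal equations:

$$ \mA^\intercal\mA\vx = \mA^\intercal\vb \quad\Longleftrightarrow\quad m\,\vx = \sum_{i=1}^m b_i. $$

Solving the normal equations, we get

$$ \vx^* = \frac{1}{m}\sum_{i=1}^m b_i. $$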

So, the least squares solution \vx^* is the mean value of the elements in \vb.
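This conclusion can be checked numerically. The sketch below assumes \mA is the all-ones column vector, so the problem amounts to fitting a single constant to the entries of \vb. The function names and the data are hypothetical.

```python
from statistics import mean

def least_squares_constant(b):
    """Solve the normal equations e^T e x = e^T b, where e is the all-ones vector."""
    m = len(b)          # e^T e = m
    return sum(b) / m   # e^T b is the sum of the entries of b

def objective(x, b):
    """Least squares objective 0.5 * sum((x - b_i)^2)."""
    return 0.5 * sum((x - bi) ** 2 for bi in b)

b = [2.0, 4.0, 9.0]     # hypothetical observations
x_star = least_squares_constant(b)

print(x_star, mean(b))  # the two values should agree
# The objective should not decrease when moving away from x_star.
print(objective(x_star, b) <= objective(x_star - 0.1, b))
print(objective(x_star, b) <= objective(x_star + 0.1, b))
```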